Master's Thesis: Natural-Language Robot Manipulation

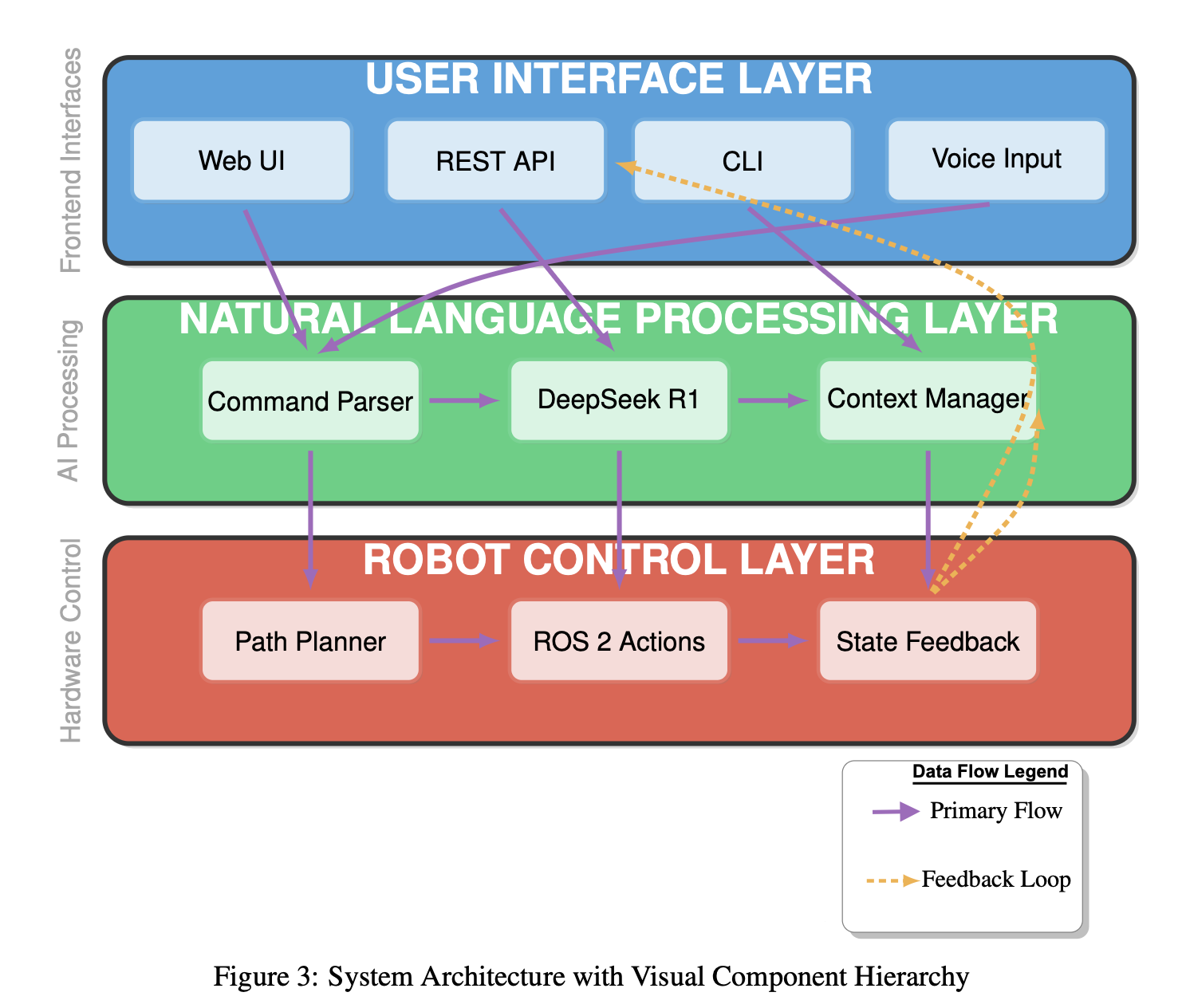

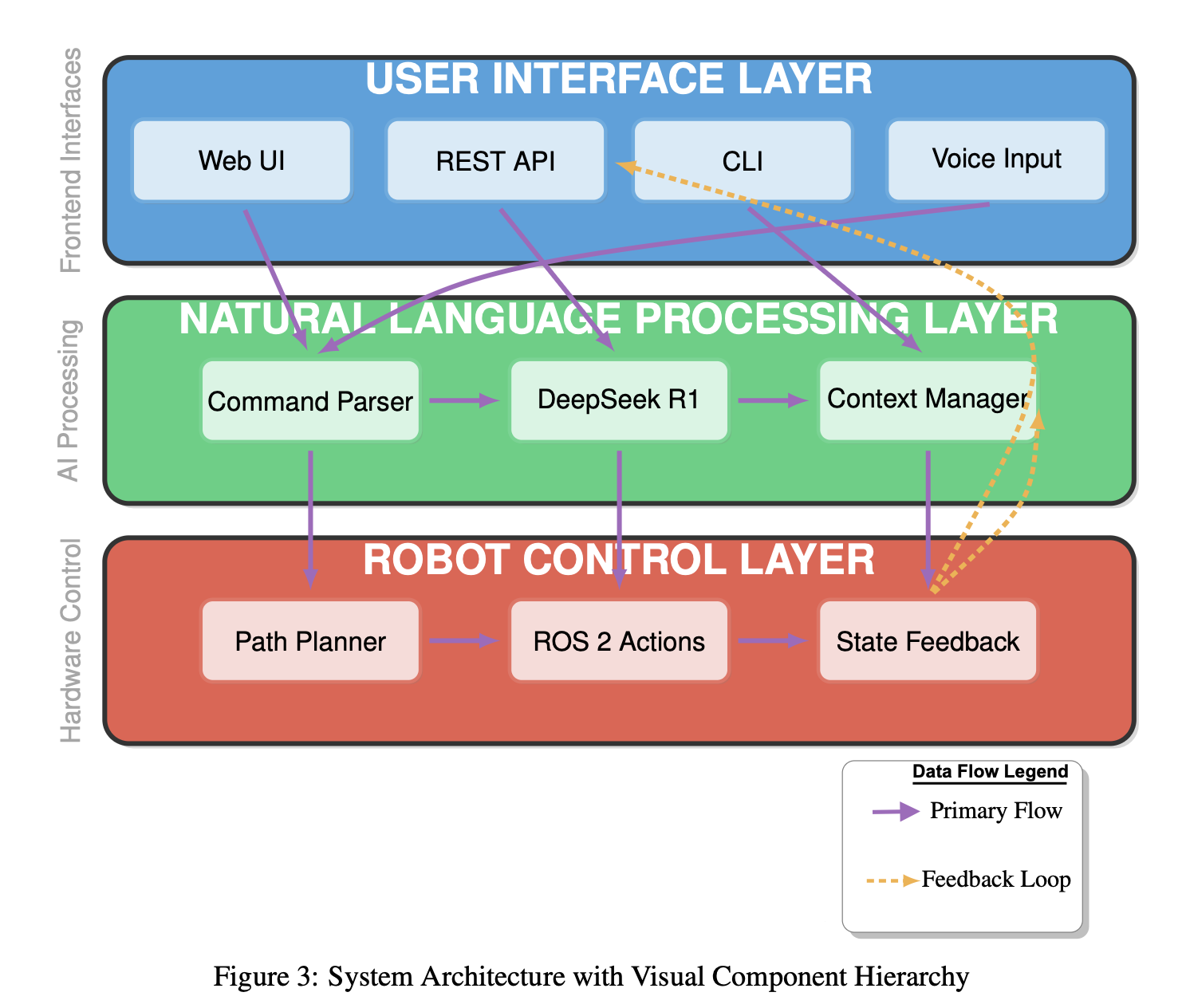

Built an LLM→ROS 2 bridge with a command parser, a context manager, and trajectory execution in Gazebo/MoveIt 2. It supports multi-user control and is covered by tests and CI.
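The parser and context manager in a bridge like this could look roughly like the sketch below. It is hypothetical and not the thesis code. It assumes the LLM is prompted to emit a JSON object with `action` and `target` fields. The action vocabulary, the `Command`/`ContextManager`/`parse_command` names, and the per-user pronoun resolution are all illustrative assumptions.

```python
import json
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical action vocabulary (assumed); the real bridge's command set is not shown here.
NEEDS_TARGET = {"pick", "place"}
ALLOWED_ACTIONS = NEEDS_TARGET | {"stop"}

@dataclass
class Command:
    user: str
    action: str
    target: Optional[str] = None

class ContextManager:
    """Remembers the last object each user referred to, so "it" resolves per user."""

    def __init__(self) -> None:
        self._last_target: Dict[str, str] = {}

    def resolve(self, user: str, target: Optional[str]) -> Optional[str]:
        if target in (None, "it", "that"):
            return self._last_target.get(user)   # pronoun: reuse this user's context only
        self._last_target[user] = target         # explicit object: update this user's context
        return target

def parse_command(user: str, llm_output: str, ctx: ContextManager) -> Command:
    """Validate LLM output (assumed to be a JSON object) into a structured Command."""
    data = json.loads(llm_output)                # malformed JSON raises JSONDecodeError (a ValueError)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    action = data.get("action")
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    target = data.get("target")
    if action in NEEDS_TARGET:
        target = ctx.resolve(user, target)
        if target is None:
            raise ValueError(f"{action!r} needs a target and none could be resolved")
    return Command(user=user, action=action, target=target)
```

Keying the context by user is one simple way to keep one operator's "it" from resolving to another operator's object under multi-user control. The validated `Command` would then go to the ROS 2/MoveIt 2 execution layer, which is not sketched here.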

I’m a robotics/AI engineer working across perception → planning → control. I design full-stack systems that combine sensor fusion, classical control, and modern ML to deliver robust behavior in real environments.

Graduated with the Gold Medal of Academic Excellence.

Chose UPenn for an MSE in Mechanical Engineering (Mechatronics).

Transferred with UPenn approval to an MS in CS (AI focus). Building end-to-end intelligent robot pipelines.


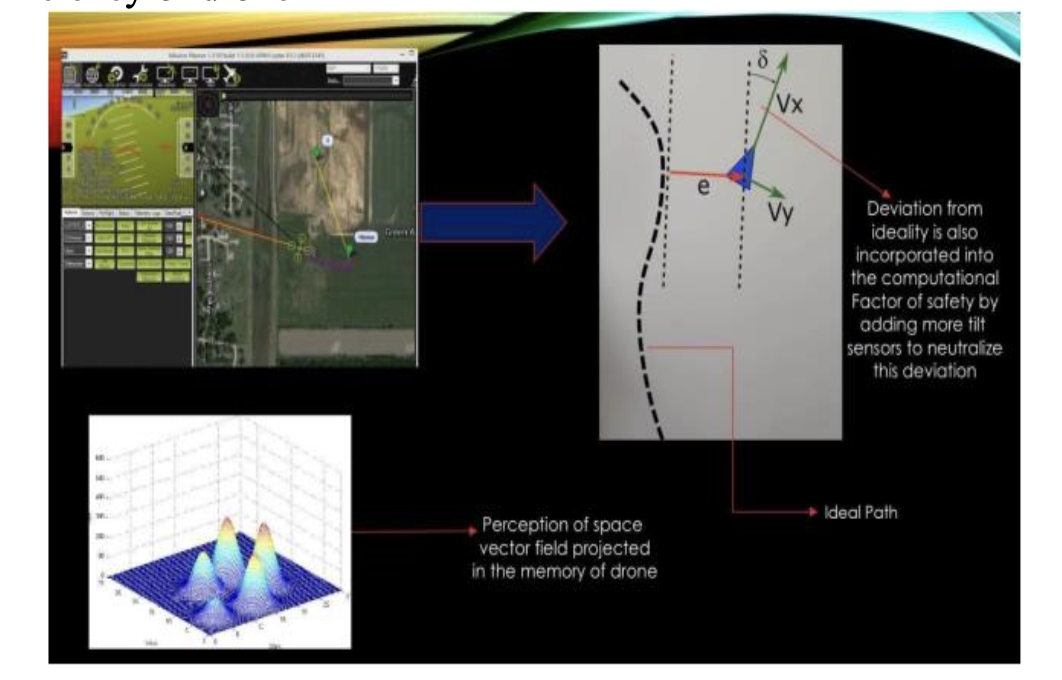

Concept and simulation of an autonomously navigating drone that can carry payloads both above and below its frame.

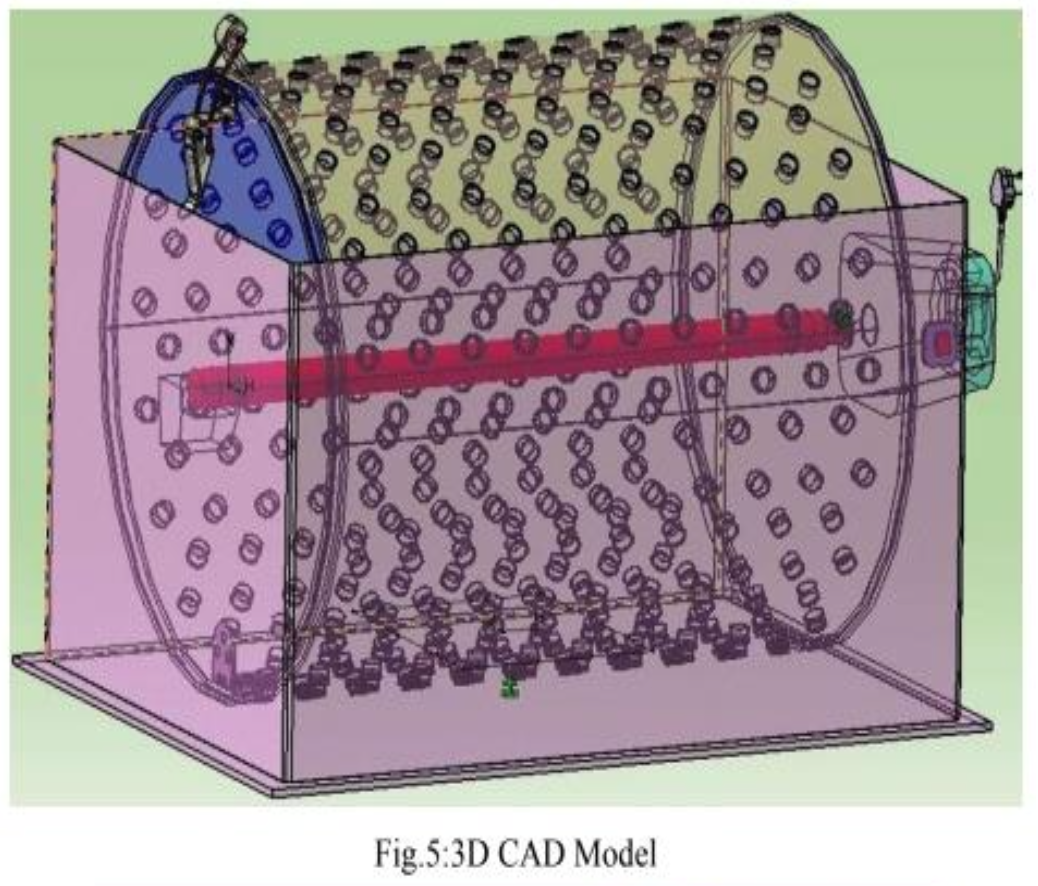

Affordable, mass-manufacturable descaling system: a perforated stainless-steel drum, a strap drive, and a wash cycle.

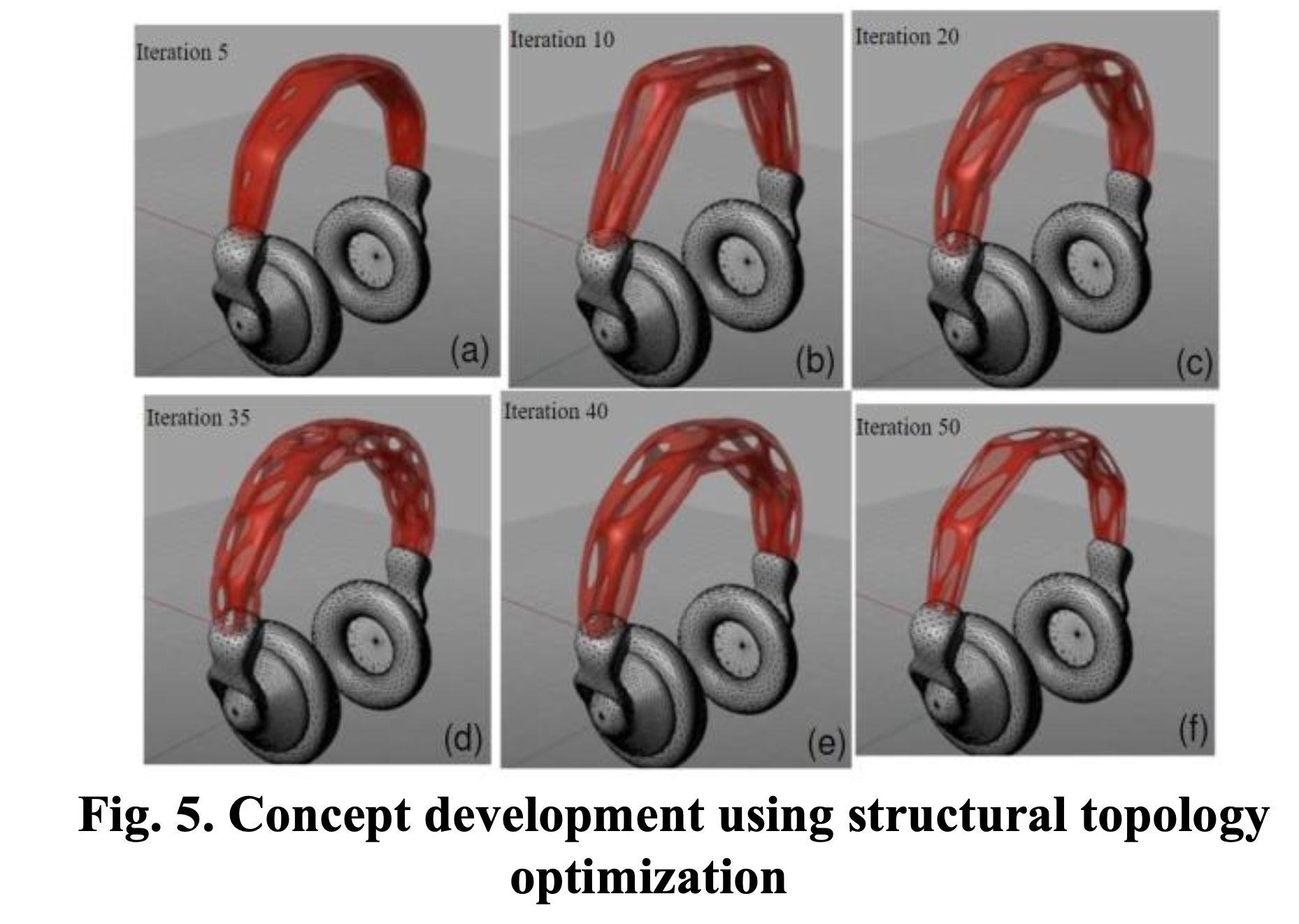

Iterative stiffness-to-mass optimization in Altair OptiStruct, with design-space reduction and a focus on manufacturable forms.

Perception → planning → control pipeline for a Fetch mobile manipulator, with improvements to its reliability and autonomy.

Reaction-wheel attitude dynamics and hardware-in-the-loop (HIL) validation at ISRO’s Inertial Systems Unit (IISU).
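Here is a minimal single-axis sketch of reaction-wheel attitude dynamics. It assumes a rigid body with no external torque, an ideal wheel motor, and a PD attitude controller. The inertias, gains, and torque limit are illustrative assumptions, not IISU hardware values, and `simulate`/`angular_momentum` are hypothetical names. The motor torque acts equally and oppositely on the wheel and the body, so total angular momentum stays constant. That gives a convenient sanity check.

```python
from dataclasses import dataclass

@dataclass
class State:
    theta: float = 0.0   # body attitude about the wheel axis [rad]
    omega: float = 0.0   # body angular rate [rad/s]
    wheel: float = 0.0   # wheel speed relative to the body [rad/s]

# Illustrative parameters (assumed, not from the project)
J_BODY = 10.0    # body inertia about the axis, excluding the wheel's spin inertia [kg m^2]
J_WHEEL = 0.01   # wheel spin inertia [kg m^2]

def simulate(theta_ref=0.1, kp=2.0, kd=6.0, tau_max=0.5, dt=0.01, t_end=60.0) -> State:
    """Rest-to-rest slew to theta_ref using a PD law and a torque-limited wheel."""
    s = State()
    for _ in range(int(round(t_end / dt))):
        u = kp * (theta_ref - s.theta) - kd * s.omega   # desired torque on the body
        tau = max(-tau_max, min(tau_max, -u))           # motor torque on the wheel; body feels -tau
        omega_dot = -tau / J_BODY
        wheel_dot = tau / J_WHEEL - omega_dot           # from J_w * (omega_dot + wheel_dot) = tau
        s.omega += omega_dot * dt
        s.wheel += wheel_dot * dt
        s.theta += s.omega * dt                         # semi-implicit Euler step
    return s

def angular_momentum(s: State) -> float:
    """Total angular momentum of body plus wheel; constant without external torque."""
    return J_BODY * s.omega + J_WHEEL * (s.omega + s.wheel)
```

During the slew the wheel spins up opposite to the body. After a rest-to-rest maneuver with no external torque, it returns to its initial speed. A HIL setup would swap the ideal `tau` for a real motor and drive in the loop.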

Robotic welding optimization using feedback control and computer-vision quality checks.

Structural and vibration analysis of an experimental setup for a drop bouncing on a vibrating bath, with analytical models of increasing fidelity (SDOF → 2-DOF → MDOF) and supporting MATLAB/Python tooling.
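To illustrate the SDOF → 2-DOF step, the sketch below computes undamped natural frequencies for a generic chain: ground, spring k1, mass m1, spring k2, mass m2. This is a textbook stand-in, not the project's model of the bath setup. The function names are hypothetical.

```python
import math
from typing import Tuple

def sdof_natural_frequency(m: float, k: float) -> float:
    """Undamped natural frequency of a single mass-spring oscillator [rad/s]."""
    return math.sqrt(k / m)

def two_dof_natural_frequencies(m1: float, m2: float, k1: float, k2: float) -> Tuple[float, float]:
    """Undamped natural frequencies [rad/s] of a ground-k1-m1-k2-m2 chain.

    Solves det(K - w^2 M) = 0 with K = [[k1 + k2, -k2], [-k2, k2]] and
    M = diag(m1, m2), which reduces to a quadratic in w^2:
        m1*m2*w^4 - ((k1 + k2)*m2 + k2*m1)*w^2 + k1*k2 = 0
    """
    a = m1 * m2
    b = -((k1 + k2) * m2 + k2 * m1)
    c = k1 * k2
    disc = math.sqrt(b * b - 4 * a * c)   # non-negative for positive masses and stiffnesses
    lam_lo = (-b - disc) / (2 * a)
    lam_hi = (-b + disc) / (2 * a)
    return math.sqrt(lam_lo), math.sqrt(lam_hi)

print(two_dof_natural_frequencies(1.0, 1.0, 1.0, 1.0))   # ≈ (0.618, 1.618)
```

This gives a quick sanity check on the progression. With a very stiff coupling spring k2, the two masses move together, and the lower 2-DOF frequency approaches the SDOF value `sqrt(k1 / (m1 + m2))`.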

A compact Climate–Economy–Population system-dynamics model, upgraded with a two-reservoir carbon cycle, a two-layer energy balance, and an abatement policy module based on a marginal abatement cost (MAC) curve. Equivalent Insight Maker and Python implementations are kept in parity for verification and validation (V&V), alongside light calibration and policy search.
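A two-layer energy balance is commonly written as `C·dT/dt = F − λ·T − γ·(T − T_D)` and `C_D·dT_D/dt = γ·(T − T_D)`. Here T is the upper-layer (surface) temperature anomaly and T_D is the deep-ocean anomaly. Below is a minimal forward-Euler sketch under a constant step forcing. The parameter values are illustrative assumptions, roughly at CO₂-doubling scale, and are not the project's calibration. `two_layer_ebm` is a hypothetical name.

```python
from typing import Tuple

def two_layer_ebm(forcing: float = 3.7, lam: float = 1.2, gamma: float = 0.7,
                  c_upper: float = 8.0, c_deep: float = 100.0,
                  dt: float = 0.1, years: float = 3000.0) -> Tuple[float, float]:
    """Step-forcing run of a two-layer energy balance model (illustrative values).

    Units: forcing in W m^-2, lam and gamma in W m^-2 K^-1,
    heat capacities in W yr m^-2 K^-1, time in years.
    Returns (upper-layer anomaly T, deep-ocean anomaly T_D) in K.
    """
    t_up, t_deep = 0.0, 0.0
    for _ in range(int(round(years / dt))):
        exchange = gamma * (t_up - t_deep)              # heat flux into the deep layer
        d_up = (forcing - lam * t_up - exchange) / c_upper
        d_deep = exchange / c_deep
        t_up += d_up * dt                               # forward Euler step
        t_deep += d_deep * dt
    return t_up, t_deep
```

At equilibrium both layers settle at `F/λ`, so the deep layer shapes only the transient. That slow centuries-scale tail is where step-by-step parity checks against an equivalent Insight Maker run would be most informative.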

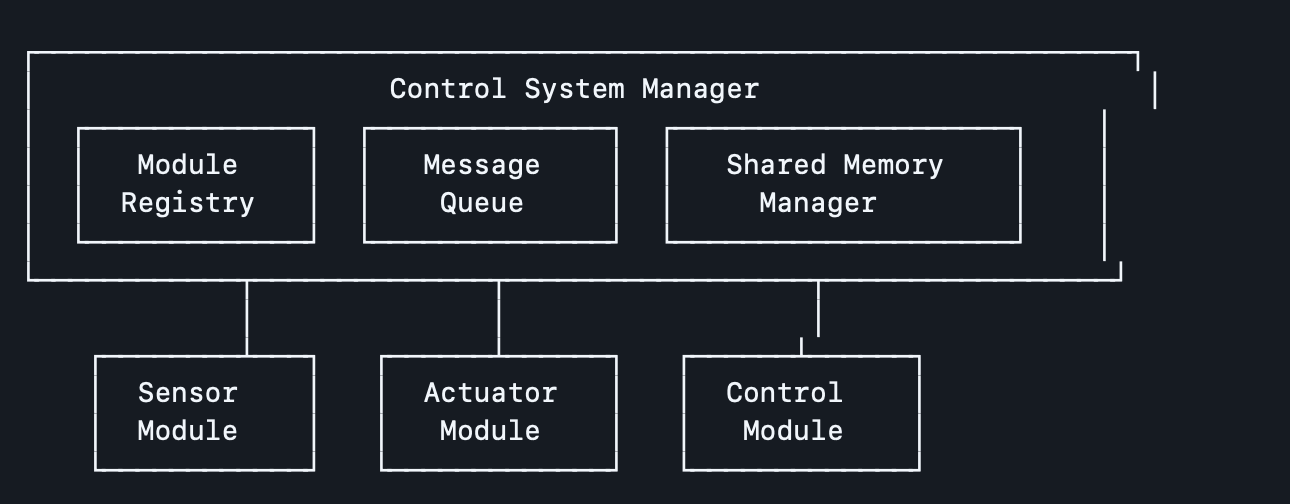

Modular real-time control framework with lock-free IPC, zero-copy shared memory, and runtime plug-in modules for sensors, actuators, and control loops.

A lightweight HTML5 Canvas app that teaches the greatest common divisor (GCD) visually. It compares two fixed segments (36 in and 10 in) against a movable probe segment (1 in or 2 in) and shows when the probe divides both segments evenly. It is built for clarity on phones and desktops, with crisp tick marks, animation, and guided steps.

The visualization encodes the number-theory fact that every common divisor of 36 and 10 must divide their GCD, which is 2. Hence, among whole-inch lengths, only a 1-inch or 2-inch probe lands cleanly on both scales, which makes the GCD obvious at a glance.
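The same arithmetic can be checked with Euclid's algorithm. The sketch below is a hypothetical Python illustration, not the app's Canvas code. The names `common_divisors` and `lands_cleanly` are illustrative, and probe lengths are assumed to be whole inches.

```python
from typing import List

def gcd(a: int, b: int) -> int:
    """Euclid's algorithm: replace (a, b) with (b, a mod b) until b is 0."""
    while b:
        a, b = b, a % b
    return a

def common_divisors(a: int, b: int) -> List[int]:
    """Every common divisor of a and b divides gcd(a, b), so only divisors of the GCD are listed."""
    g = gcd(a, b)
    return [d for d in range(1, g + 1) if g % d == 0]

def lands_cleanly(segment: int, probe: int) -> bool:
    """True if a whole number of probe lengths exactly spans the segment."""
    return segment % probe == 0

print(gcd(36, 10))               # 2
print(common_divisors(36, 10))   # [1, 2]
print([p for p in range(1, 11) if lands_cleanly(36, p) and lands_cleanly(10, p)])  # [1, 2]
```

The brute-force scan in the last line agrees with `common_divisors`. Testing each probe against both segments finds exactly the divisors of the GCD.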

One‑page PDF tuned for robotics/AI roles.

Email soutrik.viratech@gmail.com or message me on LinkedIn.